Two emerging harassment campaigns on Twitter show why social media companies’ approaches to content moderation do not address how harassment spreads online, and how it imperils those targeted, chilling their speech.

Networked harassment, in which accounts function as hubs that can direct their followers to harass specific targets, is not a new phenomenon; it has long been a fundamental part of how abuse works on Twitter. What is new is that banned users are returning to the platform under a general amnesty, despite having been removed previously for violating Twitter’s policies. These formerly banned users are ratcheting up harassment campaigns, making such abuse worse.

Influential Twitter users do not need to engage directly in hate and harassment to cause harm. Because Twitter moderation focuses on holding individual accounts responsible for harmful content, it frequently misses how influential accounts with large followings actually operate. This can be referred to as “stochastic harassment”: weaponizing talking points that incite others to harass a target without directly harassing that target oneself.

Stochastic harassment is not unique to Twitter. It happens across social media, but it specifically violates the spirit of Twitter’s hateful conduct policy, if not the policy itself.

A good example of stochastic harassment is what transpired before the sudden dissolution of Twitter’s Trust & Safety Council on December 12, 2022. Three council members had resigned in a letter posted on the platform, citing the decline in users’ safety and well-being since Elon Musk acquired Twitter.

Mike Cernovich, a conspiracy theorist known for pushing the Pizzagate story in 2016, replied to the resignation letter and said they should be jailed, attaching a link to a story about child pornography on Twitter.

The council comprised independent experts from around the world who volunteered their time to advise Twitter on its products, programs, and rules. Its members were not directly involved in any of Twitter’s decisions.

Lara Logan, a former “60 Minutes” and Fox News journalist who recently promoted an antisemitic blood libel conspiracy theory in an interview on the far-right cable channel Newsmax, quote-tweeted the letter. She alleged that the three former members had been complicit in failures to combat child sexual abuse material (CSAM) and needed to be “held accountable.” Musk replied directly to Logan and agreed, unleashing a flurry of harassment and threats directed at the three individuals.

Neither Cernovich nor Logan themselves made any direct threats against the former council members. However, the two became vectors for harassment by framing the resignations as somehow tied to a CSAM conspiracy, thereby making the connection to a well-worn extremist talking point. A number of Twitter users ran with this thread, harassing the former council members in their mentions and sending unsolicited direct messages to them.

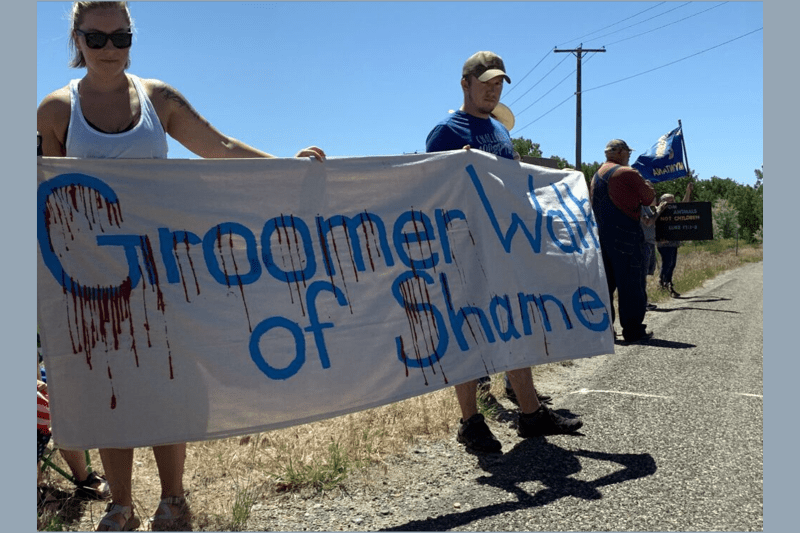

Another example of stochastic harassment, a recent incident involving Gays Against Groomers, a group that describes itself as “a coalition of gays against the sexualization, indoctrination, and medicalization of children,” demonstrates how influential Twitter accounts become vectors for mass harassment. Since its creation in July 2022, Gays Against Groomers’ account has amassed over 145,000 followers and promoted anti-LGBTQ+ talking points, particularly conspiracy theories about elementary school curricula and drag queen brunches. In September, Google, PayPal, and Venmo banned the group from their platforms.

For the most part, Gays Against Groomers quote-tweets other Twitter accounts and shares links to news stories that support its conspiratorial agenda. In this way, it functions as a clearinghouse for hate and harassment. On December 7, Project Veritas, a far-right activist group whose Twitter account suspension was reversed on November 20, released a deceptively edited “undercover video” of the dean at Francis W. Parker School, in Chicago, discussing sex ed curricula. Gays Against Groomers retweeted the video, as did other anti-LGBTQ+ accounts, including Libs of TikTok, which has been linked to threats against children’s hospitals across the United States. Libs of TikTok noticed that the Chicago school had deleted its Twitter account shortly after Project Veritas released its video. It is unclear why the school deleted its account, though it may have been harassed by users who had seen the Project Veritas video. Gays Against Groomers quote-tweeted Libs of TikTok and added, “We hope they know that none of us will stop coming after them just because they left Twitter.” Lara Logan also quote-tweeted Project Veritas, after which her followers called the dean a “predator,” a “pedo,” and a “groomer.”

Neither Gays Against Groomers nor Libs of TikTok has violated Twitter’s hateful conduct policy, which prohibits directly attacking or threatening other people. Each account tweeted the video without making explicit threats or attacks. And yet damage was still done.

These harassment campaigns illustrate the need for Twitter’s content moderation approach to align more closely with the reality of online hate and harassment, a need made even more pressing as previously banned accounts continue to come back online under this new “amnesty” policy.

Elon Musk has said he wants a broader range of views on Twitter and that it should not be a “free-for-all hellscape, where anything can be said with no consequences!” To make this work, Twitter’s policies should recognize that there is a difference between expressing viewpoints and implicitly or explicitly calling for harassment of individuals you disagree with. As we see from these cases, stochastic harassment must be addressed in the spirit of facilitating a platform that encourages free speech without amplifying hate and incitement.